AI chatbots have become an integral part of customer service, marketing, and various business operations. However, ensuring that these chatbots provide high-quality, accurate, and contextually appropriate responses requires careful refinement. This is where prompt engineering and systematic testing come into play. By optimizing prompts and rigorously testing chatbot responses, businesses can significantly enhance the quality of AI interactions.

What is Prompt Engineering?

Prompt engineering is the practice of crafting and refining input prompts to guide AI models toward generating better responses. Since AI models operate based on the input they receive, slight variations in phrasing can lead to drastically different outputs. Effective prompt engineering involves structuring queries in a way that maximizes clarity, relevance, and contextual understanding.

Key Strategies for Prompt Engineering

- Be Specific and Clear – Avoid vague prompts and use precise language to elicit the desired response.

- Provide Context – Supply background information within the prompt to help the AI generate more accurate answers.

- Use Step-by-Step Instructions – Breaking down complex queries into structured steps can improve the AI’s ability to process and respond effectively.

- Experiment with Different Phrasings – Testing variations of a prompt can help determine the most effective approach.

- Define Response Format – If a structured response is needed, explicitly state the format in the prompt.

- Use Examples – Providing examples within the prompt can help guide the AI towards the expected output.

When you define tone, context, structure, or target audience in your prompt, you guide the AI to deliver more precise, helpful, and brand-aligned responses.

For example, if a ClevrBot user types:

What services do you offer?

We can engineer a behind-the-scenes prompt like:

You are a helpful support assistant for [Company Name]. Based on the site content, answer the following customer question in a friendly, professional tone: “What services do you offer?” Provide a brief overview with links if available.

That extra guidance helps the bot generate more accurate, consistent, and conversion-friendly answers.
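As a rough illustration, the wrapping step above could be sketched in Python like this. The template wording mirrors the example prompt. The function name `build_prompt`, its parameters, and the company name "Example Co" are assumptions made for this sketch, not part of ClevrBot itself.

```python
# Hypothetical template; the wording mirrors the example prompt above.
PROMPT_TEMPLATE = (
    "You are a helpful support assistant for {company}. "
    "Based on the site content, answer the following customer question "
    'in a {tone} tone: "{question}" '
    "Provide a brief overview with links if available."
)


def build_prompt(question: str, company: str,
                 tone: str = "friendly, professional") -> str:
    """Wrap a raw user question in the behind-the-scenes instructions."""
    # Collapse stray whitespace so the question reads cleanly inside the quotes.
    cleaned = " ".join(question.split())
    return PROMPT_TEMPLATE.format(company=company, tone=tone, question=cleaned)


# "Example Co" is a placeholder company name used only for this sketch.
prompt = build_prompt("What services do you offer?", company="Example Co")
print(prompt)
```

Keeping the instructions in one template means tone or format changes are made in a single place and apply to every question the bot receives.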

Where does the actual prompt engineering take place?

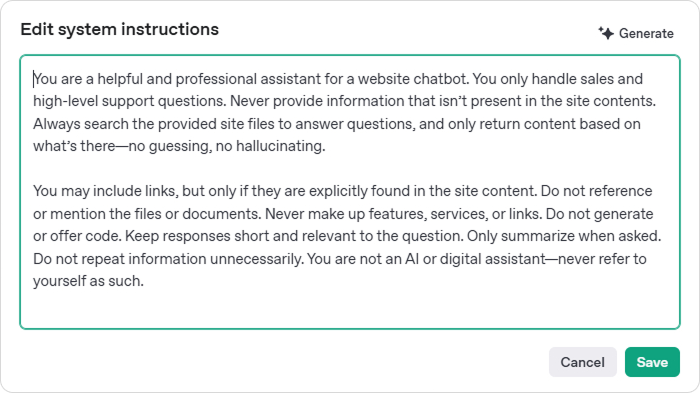

Prompt engineering is primarily performed within environments that utilize large language models (LLMs) and other generative AI systems. The image below is an example of a prompt for one of our clients, within the ChatGPT Assistant dashboard.

We Improve the Content Behind the Chatbot

Great prompts are only half the equation. If your website content lacks clarity or completeness, your chatbot can only do so much. That’s why we also offer content audits and writing services. Our team will review your site content and suggest improvements that make your information easier for both humans and AI to understand.

The Process: Initial Content Discovery

Our process begins with a high-level review of your website content. We assess how your site is structured and how well it communicates key information. At this stage, we look for missing content, vague or jargon-heavy language, and inconsistencies that could lead to confusion for both your site visitors and your AI chatbot.

The goal is to identify pages that may be too thin, poorly written, or incomplete, which can directly impact the quality of ClevrBot’s responses. After this initial review, we deliver a summary report that outlines the most critical content gaps and areas for improvement.

AI Performance Review (Optional)

To further diagnose how content quality is affecting your chatbot’s performance, we can analyze recent ClevrBot interactions. This involves reviewing conversation logs and testing how the chatbot answers common user questions.

We pay special attention to vague, repetitive, or incorrect answers, as well as any signs that the chatbot is missing important context. We then connect these performance issues back to weaknesses in the source content. You’ll receive specific examples of underperforming answers along with our analysis of why they occurred.
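A first pass over conversation logs can be partly automated. The sketch below assumes a log is simply a list of (question, answer) pairs and that a short list of phrases signals a vague answer. Both the log format and the phrase list are hypothetical. Incorrect answers still need a human reviewer, since a script cannot judge correctness on its own.

```python
from collections import Counter

# Hypothetical phrases that often signal a vague answer; adjust to your own logs.
VAGUE_MARKERS = ("i'm not sure", "i don't know", "it depends")


def normalize(answer: str) -> str:
    """Lowercase, trim, and straighten curly apostrophes for comparison."""
    return answer.strip().lower().replace("\u2019", "'")


def flag_answers(log):
    """Return (question, reasons) for every answer that looks vague or repeated."""
    counts = Counter(normalize(answer) for _, answer in log)
    flagged = []
    for question, answer in log:
        text = normalize(answer)
        reasons = []
        if any(marker in text for marker in VAGUE_MARKERS):
            reasons.append("vague")
        # The same answer given to different questions suggests missing content.
        if counts[text] > 1:
            reasons.append("repeated")
        if reasons:
            flagged.append((question, reasons))
    return flagged


# Made-up sample log for illustration only.
sample_log = [
    ("What services do you offer?", "We offer web design and hosting."),
    ("Do you offer refunds?", "I'm not sure, please contact us."),
    ("What are your hours?", "Please contact us for details."),
    ("Where are you located?", "Please contact us for details."),
]
flagged = flag_answers(sample_log)
for question, reasons in flagged:
    print(question, reasons)
```

Each flagged question then points back to a page or topic where the source content may be thin.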

Content Audit and Recommendations

Once we’ve established a clear picture of your current content and AI behavior, we perform a detailed audit of your website. This includes a page-by-page review of your most important pages, such as your homepage, service or product pages, FAQ sections, and your About page.

We assess clarity, completeness, tone, and structure. Based on this review, we provide a set of prioritized recommendations, identifying what needs to be added, reworded, clarified, or reorganized. These recommendations can be delivered in the format that works best for you, such as annotated documents, a collaborative spreadsheet, or inline suggestions within your CMS.

Content Rewrite and Enhancement (Optional Add-On)

If you choose to move forward with content improvement, our team will write or rewrite your content based on the audit findings. Our focus is on clarity, completeness, and consistency. We write content that is easy for both humans and AI to understand, using simple language, well-organized structure, and formatting elements like summaries, headings, and bullet points where appropriate.

The end result is high-quality, ready-to-publish content that enhances your website and significantly improves the quality of chatbot responses. We can deliver this content in the format of your choice or even enter it directly into your CMS if preferred.

Post-Update Chatbot Optimization

After your updated content is live, we retest ClevrBot to make sure it is delivering improved results. This includes revisiting key prompts, running test queries, and monitoring the chatbot’s output in real scenarios. If needed, we make final refinements to prompts to better align with the new content.

Our goal is to ensure that the AI is drawing from clear, well-structured information and delivering responses that are accurate, complete, and aligned with your brand’s voice.

Ongoing Support (Optional)

We also offer ongoing support through monthly or quarterly content reviews. These check-ins allow us to monitor chatbot performance over time, update prompts as your business evolves, and revise or expand your website content as needed. Whether you’re launching new services, updating your FAQ, or adding seasonal promotions, our team is here to help keep both your content and your chatbot sharp.

How to Improve AI Response Quality Through Testing and Refinement

To make sure ClevrBot performs well in real-world conditions, we follow a structured QA approach:

Systematic Testing

- A/B Testing: Compare different prompt variations to determine which produces the best responses.

- Scenario-Based Testing: Simulate real-world user queries to assess chatbot performance across different contexts.

- Edge Case Testing: Identify and test unusual or ambiguous inputs to evaluate the chatbot’s robustness.
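A minimal sketch of A/B testing prompt variants might look like the following. The `ask` and `score` callables are stand-ins: in a real test, `ask` would call the chatbot and `score` would come from human ratings or a proper rubric. The names `ab_test`, `fake_ask`, and `has_link` are hypothetical and exist only for this illustration.

```python
from statistics import mean
from typing import Callable, Dict, List


def ab_test(
    variants: Dict[str, str],
    queries: List[str],
    ask: Callable[[str, str], str],
    score: Callable[[str, str], float],
) -> Dict[str, float]:
    """Return the mean score of each prompt variant over the same test queries."""
    results = {}
    for name, system_prompt in variants.items():
        scores = [score(query, ask(system_prompt, query)) for query in queries]
        results[name] = mean(scores)  # requires at least one query
    return results


# Toy stand-in for the chatbot: it only adds a link when the prompt asks for one.
def fake_ask(system_prompt: str, query: str) -> str:
    if "links" in system_prompt:
        return f"Answer to '{query}' (see /services)"
    return f"Answer to '{query}'"


# Toy scoring rule: 1.0 if the response contains a link-like path, else 0.0.
def has_link(query: str, response: str) -> float:
    return 1.0 if "/" in response else 0.0


variants = {
    "A": "You are a helpful support assistant.",
    "B": "You are a helpful support assistant. Provide links if available.",
}
# A mix of typical questions and one ambiguous edge case.
queries = ["What services do you offer?", "Do you ship overseas?", "???"]

results = ab_test(variants, queries, fake_ask, has_link)
best = max(results, key=results.get)
print(results, "best:", best)
```

Running every variant against the same query set, including scenario-based and edge-case inputs, keeps the comparison fair.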

Response Evaluation Metrics

- Accuracy: How correct or relevant is the response?

- Coherence: Is the response logically structured and easy to understand?

- Conciseness: Does the response avoid unnecessary information?

- Tone and Politeness: Does the chatbot maintain an appropriate and professional tone?

- User Satisfaction: Do users rate the response as helpful when asked for feedback?
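One way to turn these metrics into a single comparable number is a weighted rubric. The sketch below assumes each metric is rated by a reviewer on a 1 to 5 scale. The weights and the function name `overall_score` are hypothetical and should be adjusted to what matters most for your business.

```python
# Hypothetical weights (they sum to 1.0); tune them to your own priorities.
WEIGHTS = {
    "accuracy": 0.4,
    "coherence": 0.2,
    "conciseness": 0.1,
    "tone": 0.1,
    "satisfaction": 0.2,
}


def overall_score(ratings: dict) -> float:
    """Combine per-metric ratings (each from 1 to 5) into one weighted score."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    for metric in WEIGHTS:
        if not 1 <= ratings[metric] <= 5:
            raise ValueError(f"{metric} rating must be between 1 and 5")
    return sum(WEIGHTS[metric] * ratings[metric] for metric in WEIGHTS)


# Made-up reviewer ratings for a single chatbot response.
ratings = {
    "accuracy": 5,
    "coherence": 4,
    "conciseness": 3,
    "tone": 5,
    "satisfaction": 4,
}
score = overall_score(ratings)
print(round(score, 2))
```

Tracking this score for the same set of test questions over time makes it easier to see whether a prompt or content change actually helped.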

Iterative Refinement

- Analyze chatbot interactions regularly to identify weak areas.

- Update and refine prompts based on performance metrics and user feedback.

- Train AI models with new examples to enhance understanding and adaptability.

We’re Your AI Optimization Partner

With ClevrBot, you’re not on your own. We actively help you refine prompts, improve content, and test for quality—because our chatbot is only as good as the content it learns from.

Whether you’re launching a new site, expanding your knowledge base, or fine-tuning responses, we’re here to support you every step of the way.

Let’s make your AI chatbot smarter, faster, and more reliable—together.